A few months back, I decided to convert an existing .NET Core application to run on Linux. As part of this work, I decided to run it inside a Docker container, which meant I could have my application running on Linux without worrying about setting up all of the dependencies on the server, such as the .NET runtime, Apache, etc.

Now I fully realise that Windows containers exist and that I could have tried to get them to work. But the aim for me wasn’t to run on Docker, it was to run on Linux. Docker just came in handy because it meant I didn’t need to install all dependencies onto my Linux server – I could just fire up Docker.

Now, much like communism, Docker is pretty great--in theory. Once the process begins, however, you quickly realise just how many worms are inside the can you’ve just opened.

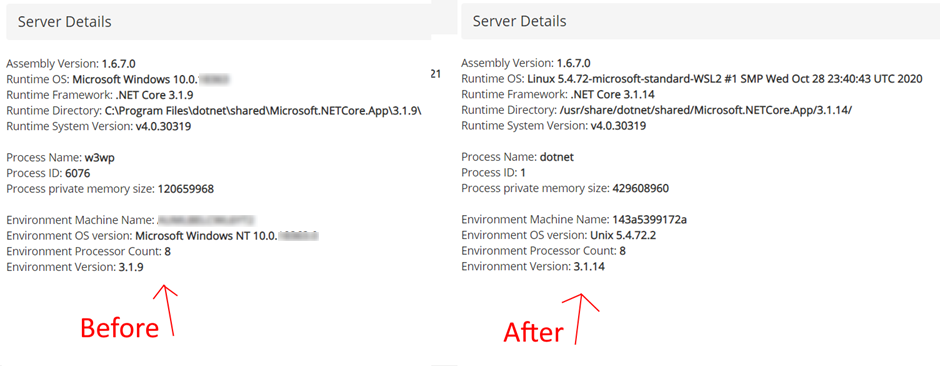

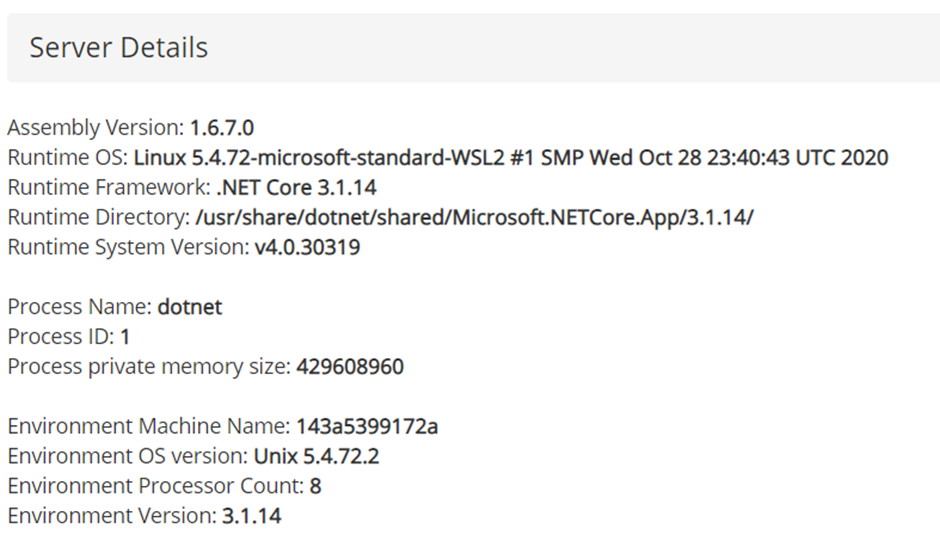

But unlike communism, in the end, this worked! Here’s the output from my web application showing some server details. Notice any differences?

So with that in mind, here's a list of issues that you should probably know about.

Building the Docker image

Image Layers and Caching

The first step was to package my application up inside a Docker image. Microsoft has some great articles on how to do this, but they are the usual only-works-for-a-simple-demo examples. My application is a bit larger and has multiple project files, which is hardly novel. Fortunately, a programmer named Tometchy has a great blog post on how to set this up. After following his advice, I created my new Dockerfile and ran:

docker build -f src/myproject/Dockerfile .

... and it built! However... it was taking forever!

Unless you structure your Dockerfile in a very particular way, your NuGet package restores will always take forever. Copying the whole source tree in one step means any changed file invalidates Docker's cache for that layer and everything after it, so .NET does a complete NuGet restore on every build instead of reusing previously restored packages. This means builds take minutes instead of the usual seconds. So instead of doing this:

COPY . ./
RUN dotnet build

You can take advantage of Docker’s layer caching like so:

COPY src/myproject/myproject.csproj src/myproject/
COPY nuget.config ./nuget.config
RUN dotnet restore src/myproject/myproject.csproj \
    --configfile nuget.config \
    --packages packages
COPY . ./
RUN dotnet build

This means that a new Docker build won't do a full NuGet restore every time. That only happens when you change your project file or nuget.config. This meant I could build my images in a few seconds. Now that it was working, it was time to run my application.

Getting the app to start up

Localhost

When running an app inside Docker, localhost suddenly isn't localhost. The network has been virtualised, so localhost now refers to the Docker container itself, not the host. There is a simple fix for this: use host.docker.internal instead of localhost. This will work when developing locally, but when running on a server you're probably better off specifying the full hostname and hoping that DNS is set up correctly. Once I changed this, my application could at least connect to my Seq instance to start logging startup errors.
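One way to avoid hard-coding either name is to read the address from the environment. The following is a minimal sketch under stated assumptions, not the code from the application described here: the `SEQ_URL` variable name and the `ServiceAddresses` class are hypothetical, and 5341 is assumed to be the port the Seq instance listens on.

```csharp
using System;

static class ServiceAddresses
{
    // Hypothetical helper: returns the Seq URL from the SEQ_URL environment
    // variable, falling back to the host alias used for local development.
    public static string SeqUrl()
    {
        var configured = Environment.GetEnvironmentVariable("SEQ_URL");
        return string.IsNullOrWhiteSpace(configured)
            ? "http://host.docker.internal:5341"
            : configured;
    }

    static void Main()
    {
        Console.WriteLine(SeqUrl());
    }
}
```

On a server, the container could then be started with something like `docker run -e SEQ_URL=http://seq.example.internal:5341 ...`, where the hostname is a placeholder for wherever Seq actually lives.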

Support for third party libraries

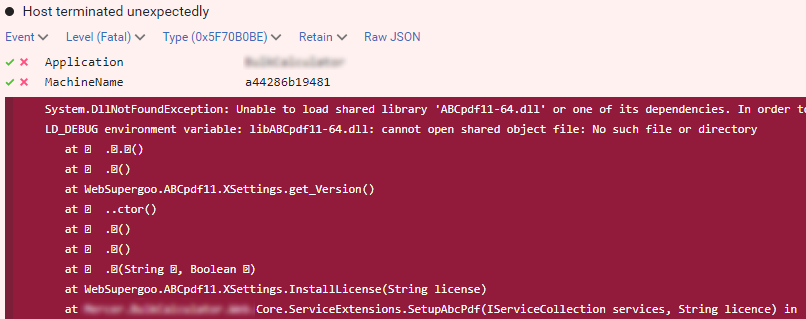

Once I fixed my references to localhost, the next problem was that not all of my DLLs were loading properly. At startup I would get this error:

For PDF management I’m using a library named AbcPdf. It’s a great library with heaps of functionality that I’ve been using for years. There’s just one problem. AbcPdf isn't supported on Linux. There are just no Linux binaries, full stop. Sure, this was hidden inside their documentation somewhere, but the only way to find this out was to actually launch my application and see if it crashed at startup.

This was pretty confusing, because the code worked great on Windows. (I particularly like the stack trace with unreadable characters.) But after a bit of searching on their website, I found this gem:

Windows only – not Linux or Xamarin

This was a problem. My only options were:

- Investigate other PDF libraries that have Linux support

- Refactor out all of the AbcPdf code and run it on Windows

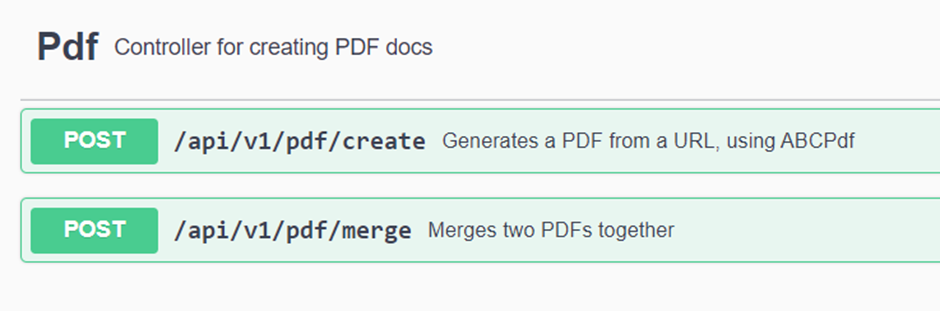

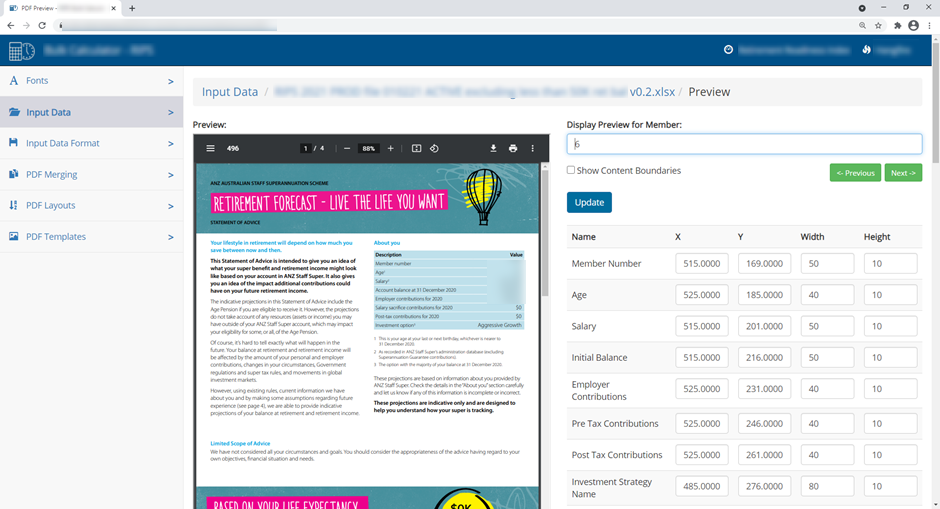

I chose the second option. I refactored out all of the code to a separate application that I would run on Windows and slapped an API wrapper around it. This was a bit of work, but there wasn’t that much code there, and what was there could be pulled out pretty easily. After a day, I ended up with a nice API for creating and merging PDFs:

This was also quite nice as I knew there was another application that needed some PDF functionality, and now I had a nice and reusable API to call.

The next thing that I needed to do was to check that all of my other dependencies had Linux support. So here’s the full list of libraries that I’m using:

- Hangfire - long running tasks

- Serilog + Seq - logging

- Swashbuckle - Swagger API

- AWS SDK - talking to S3

- EPPlus - Excel file manipulation

- MongoDB

Fortunately, all of them have fantastic Linux support. Except for the one that didn’t.

Windows Authentication

The next problem was happening at startup:

System.InvalidOperationException: No authenticationScheme was specified, and there was no DefaultChallengeScheme found. The default schemes can be set using either AddAuthentication(string defaultScheme) or AddAuthentication(Action<AuthenticationOptions> configureOptions).

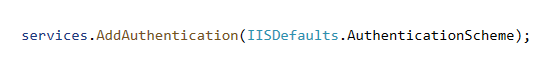

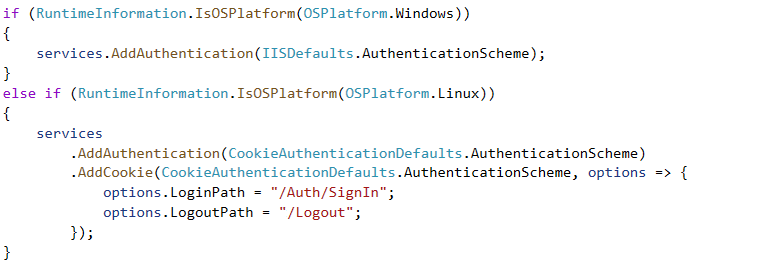

This didn’t make any sense. It’s the same code from Windows… but the more I looked at it, the more I realised that this was probably the culprit:

First off, the app isn't using IIS, so chances are IIS authentication isn't going to work. After much googling, I found that while it is possible to get Windows authentication working on Linux, setting it up requires access to a domain controller, something that I expect no developer would have, or should have! This is clearly not a practical solution. My solution was to move Windows auth out of the Linux server and into a different application (a topic for another post). But, to get you started, I found that I could have the application use IIS auth when running under Windows, and a different auth when running under Linux:
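A minimal sketch of what such a switch might look like, assuming an ASP.NET Core web project. This is an illustration rather than the original code: the `AuthSetup` class and `AddPlatformAuthentication` method are hypothetical names, and cookie authentication is only a stand-in for whatever "a different auth" is on Linux.

```csharp
using System.Runtime.InteropServices;
using Microsoft.AspNetCore.Authentication.Cookies;
using Microsoft.AspNetCore.Server.IISIntegration;
using Microsoft.Extensions.DependencyInjection;

public static class AuthSetup
{
    // Call from ConfigureServices: services.AddPlatformAuthentication();
    public static IServiceCollection AddPlatformAuthentication(this IServiceCollection services)
    {
        if (RuntimeInformation.IsOSPlatform(OSPlatform.Windows))
        {
            // Hosted behind IIS: use the scheme IIS provides for Windows auth.
            services.AddAuthentication(IISDefaults.AuthenticationScheme);
        }
        else
        {
            // Assumption: cookie auth stands in for the Linux-side scheme.
            services.AddAuthentication(CookieAuthenticationDefaults.AuthenticationScheme)
                    .AddCookie();
        }

        return services;
    }
}
```

Either branch registers a default scheme, which is what the exception above complains is missing.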

This is kind of cool, but really it probably should be a config that is set at startup, not determined by the code at runtime depending on the OS that it’s running on.

Runtime Errors

My application was now loading! I could click around and it was responding. It barely worked, but hey, it was loading. Now to start fixing all of the runtime errors.

System fonts

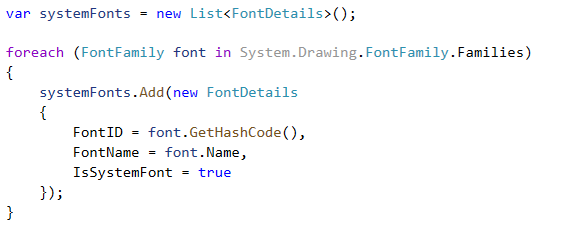

Inside my application I had a little bit of code that created a list of all of the system fonts installed on the server:

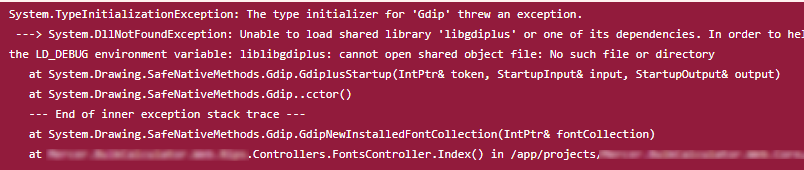

When this code ran, I got this error:

Not the most helpful error message I've ever seen, but suffice to say, managing font files just doesn't work. I managed to find a GitHub issue that had a few workarounds, but I never got around to trying them. I ended up moving this code to the PDF API that I mentioned earlier.

This is the kind of thing that ended up being quite common when testing my application on Linux. Something causes the app to crash that normally works on Windows, you google it to find a couple of possible workarounds and try out a few.

Unspecified Culture

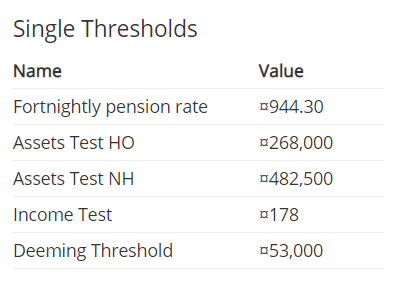

Notice anything weird going on here?

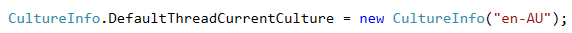

Ever seen the ¤ character before? Neither had I, but apparently it's the currency sign used to denote an unspecified currency. This means you need to set the culture inside your code instead of relying on the server’s culture:
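A minimal sketch of setting the culture in code, as a console program. The choice of `en-US` is an assumption for illustration; use whichever culture your users expect. It also assumes the container image ships culture data: if the runtime is in globalization-invariant mode, every culture behaves like the invariant one and the ¤ comes back.

```csharp
using System;
using System.Globalization;

static class Program
{
    static void Main()
    {
        // Assumption: en-US is only an example culture.
        var culture = CultureInfo.GetCultureInfo("en-US");

        // Apply it to the current thread and to every thread started later.
        CultureInfo.CurrentCulture = culture;
        CultureInfo.DefaultThreadCurrentCulture = culture;
        CultureInfo.DefaultThreadCurrentUICulture = culture;

        // The invariant culture reproduces the unspecified-currency sign.
        Console.WriteLine(1234.5m.ToString("C", CultureInfo.InvariantCulture)); // ¤1,234.50

        // With the culture set explicitly, the server's locale no longer matters.
        Console.WriteLine(1234.5m.ToString("C"));
    }
}
```

In a web application the same three assignments would go at the very start of startup, before any request is handled.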

Problem solved. That was a good one!

HTTPS and Certificates

Linux and Windows handle certificate trust differently. One of the APIs that I’m calling had a certificate that used older ciphers. Apparently, on Windows this was fine, but on Linux it was not, and the SSL connection was rejected. I ended up having to modify the runtime OpenSSL configuration inside the Docker container:

RUN sed 's/DEFAULT@SECLEVEL=2/DEFAULT@SECLEVEL=1/' \
    /etc/ssl/openssl.cnf > /etc/ssl/openssl.cnf.changed && \
    sed 's/TLSv1.2/TLSv1.1/' /etc/ssl/openssl.cnf.changed \
    > /etc/ssl/openssl.cnf.changed2 && \
    mv /etc/ssl/openssl.cnf.changed2 /etc/ssl/openssl.cnf

Yikes. Isn't it great how Docker simplifies things?

Timezones

When you fetch the list of time zones, the list you get back on Linux is completely different from the one on Windows! This is because the list comes from the OS, and of course the two do it completely differently: Windows uses its own IDs such as "AUS Eastern Standard Time", while Linux uses IANA IDs such as "Australia/Sydney". There is a library to work around this, but boy is it a doozy to catch at runtime.
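To make the difference concrete, here is a minimal hand-rolled sketch that tries a Windows ID first and an IANA ID second. It is not the library mentioned above; the `TimeZones` class and `FindFirst` method are hypothetical names, and on Linux it assumes the image has time zone data installed.

```csharp
using System;

static class TimeZones
{
    // Tries each candidate ID in turn and returns the first one this OS knows.
    public static TimeZoneInfo FindFirst(params string[] ids)
    {
        foreach (var id in ids)
        {
            try
            {
                return TimeZoneInfo.FindSystemTimeZoneById(id);
            }
            catch (TimeZoneNotFoundException)
            {
                // Unknown on this OS: fall through to the next candidate.
            }
            catch (InvalidTimeZoneException)
            {
                // Found but unreadable: also try the next candidate.
            }
        }

        throw new TimeZoneNotFoundException(
            "None of these time zone IDs exist: " + string.Join(", ", ids));
    }

    static void Main()
    {
        // The Windows ID and the IANA ID for the same zone.
        var sydney = FindFirst("AUS Eastern Standard Time", "Australia/Sydney");
        Console.WriteLine(sydney.BaseUtcOffset); // 10:00:00
    }
}
```

This only helps when you look up a known zone by ID. Code that shows users the full list still gets a different list on each OS, which is where a dedicated mapping library earns its keep.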

Network Share Paths

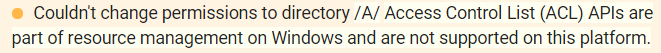

Writing to a Windows network share is suddenly much more difficult. My solution was to mount the network share on the host using Samba (via smbclient), map this mounted share to a Docker volume, and then read from that. It then finally works, after only adding two more levels of abstraction! But… can you change the permissions on the newly created file? Let’s try it:

Oh well, I guess not. This was annoying as it meant I couldn't change basic file permissions like making a file read-only, or do something more advanced like change the owner of the file.

After a while you kind of get used to seeing messages that effectively say "this stuff works on Windows but not on Linux".

Case sensitive URLs

My URLs needed to be the correct case! Previously, a URL like /content/MyFile.js would load even if the actual file on disk was stored as /content/myfile.js. However, now that my app is running on Linux, where the file system is case sensitive, that request fails. This wasn’t too much of a problem, but it caught me out a few times.

Other Things

There were a few other things that I needed to keep in mind.

Disk Space

When building Docker images, I would constantly run out of disk space. It’s really annoying, and it's quite difficult to troubleshoot and work out where all of the space is going and how to clean it up. Yes, you have the Docker prune command, but that wasn’t really working. I ended up having to impose some pretty strict “only use this much space” policies on my Hyper-V image, which were really difficult to get right.

If you're not careful, the Docker images can become huge. One of the third-party libraries that I was using was putting 100 MB of stuff in my application's bin directory. This meant every Docker image push and pull would use a lot more bandwidth than I expected. Easily done and difficult to spot.

Automated Builds and Deploys

My automated build was suddenly very different. Instead of using the normal templated solution that I used for all of my other applications, I suddenly needed a custom build for Docker. The deploys are also completely different - better, MUCH better, but oh so different. Instead of pushing the package to the web server from your CI server, the web server pulls it directly from the image hub. A very different way of thinking about it. Not something that I really thought through, and something that will need a bit of effort put in.

Security

You need to keep passwords and all other secrets out of your image. This means passing through all secrets as environment variables and making sure your application is loading them in at runtime. Nothing extraordinary here, but some more work that needed to be done to make sure it was working, and setting up an env.list file.
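A minimal sketch of loading a secret at runtime and failing fast when it is missing. The `Secrets` class and the `MONGO_CONNECTION` variable name are hypothetical, chosen only for illustration.

```csharp
using System;

static class Secrets
{
    // Returns the named environment variable, or throws with a clear message
    // so a missing secret is caught at startup rather than on first use.
    public static string Require(string name)
    {
        var value = Environment.GetEnvironmentVariable(name);
        if (string.IsNullOrEmpty(value))
        {
            throw new InvalidOperationException(
                $"Missing required environment variable '{name}'.");
        }

        return value;
    }

    static void Main()
    {
        // MONGO_CONNECTION is a hypothetical variable name.
        var connection = Require("MONGO_CONNECTION");

        // Never log the secret itself; its length is enough to confirm it loaded.
        Console.WriteLine($"Loaded a connection string of {connection.Length} characters.");
    }
}
```

The env.list file then holds one `NAME=value` pair per line and is passed with `docker run --env-file env.list ...`, so the values never end up in an image layer.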

The Aftermath

So after all of those woes, I managed to get my application fully up and running:

I added a new page to dump out some runtime information, and it now displays the following:

This is pretty cool! This opens up a world of possibilities:

- My application runs faster.

- .NET on Linux performs better than on Windows.

- It uses less memory and is able to handle more traffic

- Deploys are vastly improved:

  - They are much simpler - just pull down the Docker image and run it

  - A lot faster - practically zero downtime as it only takes a second to start up

  - Instant roll-back - running a previous version only takes a second

- Scaling out my application is much easier – just run the Docker image on another server

- My app can run easily on Azure or AWS

- My Docker image can be scanned for out-of-date dependencies or security problems

- Integration tests are now much easier to run. I can create a Docker compose file that sets up the entire environment (database, APIs, etc.) and runs my tests

- I can upgrade to a newer version of the .NET runtime without having to worry about what’s installed on the server

- I can run my app on a Kubernetes cluster

While moving to Docker wasn’t exactly a walk in the park, now that I have that knowledge, moving my other applications won’t be nearly as difficult.

Workshops!

Great job on reading this far! If you're interested in this kind of stuff I'm currently running two different workshops on similar topics:

- Optimising your code with Visual Studio's Profiler

- Porting your ASP.NET application from Framework to Core

Thanks for reading and remember... you don't need permission to be awesome.